Module 6--Working with Geometries

Because of COVID, we were only required to do the last two modules. I completed M7 first, because it seemed easier.

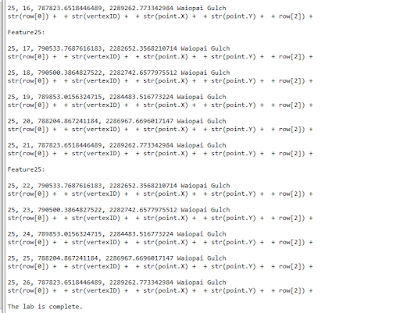

In this module, I had to work with nested for loops, search cursors, and writing to text files for a system of rivers in Hawaii. The lab was very confusing, and you definitely needed to write pseudocode and break the lab up into small parts. However, the exercise provided a base of knowledge to build upon.

Below are the results of the text file, which returned the Feature ID, Vertex ID, X and Y Coordinate, and the name of the feature:

Screenshot Showing OID, Vertex, X/Y Coordinates, and Name

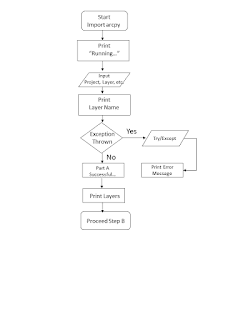

Below is the pseudocode:

Start

import arcpy

from arcpy import env

env.overwriteOutput = True

env.workspace = "S:\GISProgramming\Module6\Data"

open new text file "rivers_TJD.txt" with the write function ("w") as f

fc = "rivers.shp"

cursor = arcpy.da.SearchCursor(fc, ["OID@", "SHAPE@", "NAME"])

for each row (feature) in the cursor:

set vertex = 0

for each point in each part of the feature's geometry:

vertex += 1

f.write the results (OID, vertex, X, Y, name) to the text file

print the results

close the text file

delete row

delete cursor

print statement: "The lab is complete"

End
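The pseudocode above can be sketched in Python roughly as follows. This is only a sketch under some assumptions, not the exact lab solution: `write_vertices` and `run_with_arcpy` are names I made up for illustration, the space-separated output layout is a guess at the format, and the output file is written to the current working directory. The loop logic is kept separate from arcpy so it can be tried on any rows shaped like `(OID, geometry, name)`.

```python
def write_vertices(rows, out_path):
    """Write one line per vertex: OID, vertex number, X, Y, name.

    `rows` is any iterable of (oid, geometry, name) tuples, where the
    geometry is iterable by part and each part is iterable by point
    (objects with .X and .Y), as with the SHAPE@ token of a cursor.
    Hypothetical helper, not part of arcpy.
    """
    written = 0
    with open(out_path, "w") as f:          # "w" creates or overwrites the file
        for oid, geom, name in rows:        # outer loop: one row per feature
            vertex = 0                      # restart the count for each feature
            for part in geom:               # nested loops: parts, then points
                for pnt in part:
                    if pnt is None:         # skip null separators, if any
                        continue
                    vertex += 1
                    line = "{0} {1} {2} {3} {4}".format(
                        oid, vertex, pnt.X, pnt.Y, name)
                    f.write(line + "\n")
                    print(line)
                    written += 1
    return written                          # file is closed by the with block


def run_with_arcpy():
    """Run against the lab data; needs ArcGIS and the paths from the lab."""
    import arcpy
    from arcpy import env

    env.overwriteOutput = True
    env.workspace = r"S:\GISProgramming\Module6\Data"
    fields = ["OID@", "SHAPE@", "NAME"]
    # The with statement releases the cursor, so no explicit del is needed.
    with arcpy.da.SearchCursor("rivers.shp", fields) as cursor:
        write_vertices(cursor, "rivers_TJD.txt")
    print("The lab is complete")
```

Calling `run_with_arcpy()` from a Python environment that has arcpy installed should produce the text file; `write_vertices` on its own can be checked with a few hand-made rows first.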

As always, take it one step at a time and look closely at indents, spaces, and other small issues to prevent syntax errors. Make sure you enable the overwrite setting so you do not get an error when you rerun part of the script and an output already exists. Remember to think of the information you write as a table of columns and rows. The columns are the headers (OID, Vertex, X Coord, Y Coord, Name), and the rows are the individual vertices of each feature. Therefore, each time through the loop, you use {} placeholders in the format string to identify each column (e.g., {0} will be OID, {1} will be vertex, etc.).
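To make the column idea concrete, here is a small sketch of how the numbered placeholders line up with the headers. The function name `format_row`, the tab separator, and the sample values are all made up for illustration; they are not from the lab data.

```python
# Column order matches the headers: OID, Vertex, X Coord, Y Coord, Name.
HEADER = ("OID", "Vertex", "X Coord", "Y Coord", "Name")


def format_row(oid, vertex, x, y, name):
    # {0} is filled by the first argument to format(), {1} by the second,
    # and so on, so each placeholder acts as one column of the table.
    return "{0}\t{1}\t{2}\t{3}\t{4}".format(oid, vertex, x, y, name)


# Hypothetical sample values, only to show the layout.
print("\t".join(HEADER))
print(format_row(0, 1, 100.5, 200.25, "Example River"))
```

Because the placeholders are numbered, the order of the arguments passed to `format()` decides which value lands in which column.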